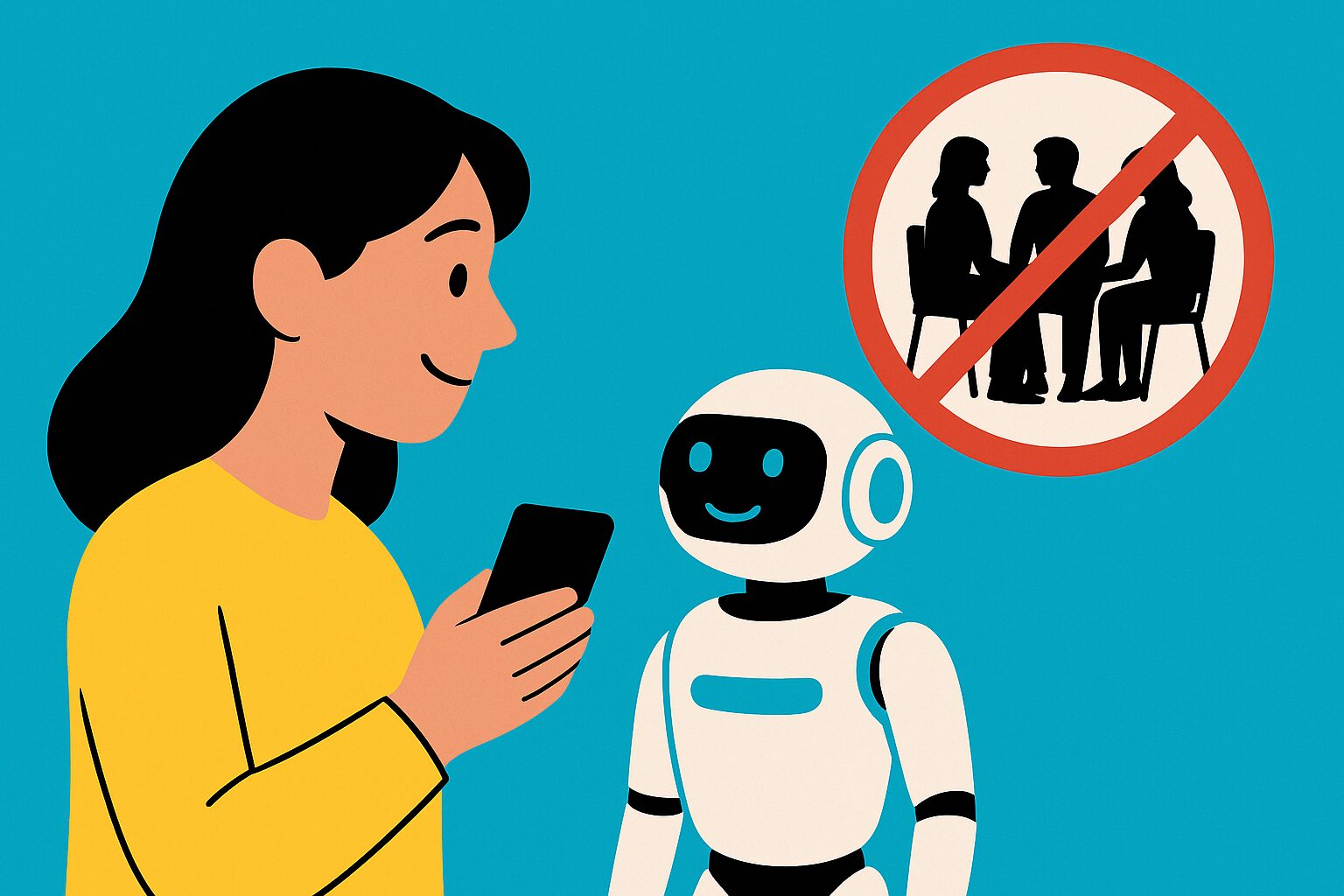

We hear it all the time: AI is smart, AI can help, AI can even be your friend. Reid Hoffman, LinkedIn co-founder, put it clearly: “I don’t think any AI tool today is capable of being a friend.” Zuckerberg disagrees and is pushing AI companionship everywhere. That’s a hard stance to believe in. We have been burned before: remember social media? That is reason enough to slow down before we let AI blur the line between tool and human connection. It also raises broader questions about ethical AI.

AI Companionship vs. True Friendship

Hoffman points out that friendship is a two-way street. It’s not just about someone being there for you; it’s also about you being there for them. That kind of give and take is what relationships are built on, and it is part of what makes us human. No AI has that.

According to Hoffman: “Companionship and many other kinds of interactions are not necessarily two directional that’s the kind of subtle erosion of humanity.”

AI isn’t holding you accountable. It can’t cheer you on, call you out, or grow with you. At best, it’s a mirror: a yes-man, and a good one at that. A mirror isn’t a friend, and neither is AI.

We Have Seen This Before: Social Media

We rushed into social media without fully thinking it through. The warnings started in 2009 with peer-reviewed health studies. By 2017, Facebook admitted that passive use could harm your mental health. By 2022, research linked social media with a 77% rise in severe depression and a 20% increase in anxiety. In 2023, the Surgeon General said social media poses clear risks to youth mental health. It took 14 years, from those first studies to that advisory, for the harm to be stated so plainly in public. By then it was too late: people had already been affected, and the damage was done.

So what about AI and mental health? Now Zuckerberg wants AI chatbots to fill the loneliness gap. Maybe loneliness grew because social media replaced real interaction. Maybe this is déjà vu on a larger scale.

We Are Rushing Ahead Again: Jobs, Kids, and Emotional Bonds

What’s next? CEOs like Dario Amodei of Anthropic warn that AI could take entry-level white-collar jobs. Bots are being tested as emotional tutors, companions, even babysitters. Even Google’s Gemini is being marketed as something to “chat with.”

But what are we rushing past?

- Emotional mix-up: People might lean on bots instead of real human relationships.

- Skill erosion: If AI does your talking, socializing, and thinking, you lose practice.

- Job displacement: AI could reach from entry-level roles to white-collar work.

- Tutor privacy: What happens to a child’s privacy when bots tutor and babysit?

- Safety standards: AI safety standards get overlooked in the rush.

- Safety & privacy: Are we ready to trust a bot with our kids, our data, our mental health?

We learned the hard way through social media. Studies took 14 years to catch up. Regulation only came after the damage was done. We should be smarter this time.

Slow Down, Test, Understand: Establishing AI Safety Protocols

This isn’t about halting progress. It’s about pacing it. We need real-world pilot tests, transparent standards and questions like:

- Can this AI tool explain what it’s doing?

- Does it say “friend, go talk to your real friends,” as the Pi assistant does?

- What accountability mechanisms cover privacy, data storage, and oversight?

- What happens to a child who thinks Alexa is their BFF?

Transparency standards and government safeguards aren’t buzzwords; they are necessary.

AI Friendship Risks

Yes, AI has huge potential. But calling it a friend? That’s a road we should not want to head down. It is the same mistake we made with social media: rushing into emotional territory without understanding the fallout. It took 14 years to see the harm it caused. Let’s not waste another decade on AI before we sort out what friendship, empathy, and humanity should really mean.